
| Introduction |

CompOSE|Q is a customizable and safe distributed systems middleware infrastructure, built to provide cost-effective QoS-based distributed resource management. It allows multiple resource management policies to execute concurrently in a distributed system in a safe and correct manner, which in turn permits safe integration of resource management mechanisms for services such as mobility, load balancing, fault tolerance and end-to-end QoS management. It is based on a two-level meta-architectural model that facilitates specifying and reasoning about the composability of multiple resource management services in Open Distributed Systems (ODSs). The CompOSE|Q reflective framework uses Actors, a distributed computing paradigm built on a model of concurrent active objects, with a built-in notion of encapsulation and interaction among the concurrent components of an ODS. In the actor paradigm, the universe contains computational agents called actors, distributed over a network. Traditional passive objects encapsulate state and a set of procedures that manipulate the state; actors extend this by encapsulating a thread of control as well. Each actor potentially executes in parallel with other actors and may communicate with other actors via asynchronous message passing. Using Actors, we define a meta-architecture framework that permits customization of resource management mechanisms such as placement, scheduling and synchronization.
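As a rough illustration of the actor paradigm described above, the following Java sketch shows an object that encapsulates state, a mail queue and its own thread of control, and that is reached only through asynchronous sends. The names `SketchActor`, `CounterActor` and `ActorDemo` are hypothetical and are not taken from the CompOSE|Q code base; using a `CompletableFuture` as a reply channel is likewise an assumption made only to keep the example short.

```java
import java.util.concurrent.BlockingQueue;
import java.util.concurrent.CompletableFuture;
import java.util.concurrent.LinkedBlockingQueue;

// Hypothetical minimal actor: private state, a mail queue and its own thread of control.
abstract class SketchActor implements Runnable {
    private final BlockingQueue<Object> mailQueue = new LinkedBlockingQueue<>();

    // Start the actor's thread; a daemon thread keeps the sketch from blocking JVM exit.
    void start() {
        Thread thread = new Thread(this);
        thread.setDaemon(true);
        thread.start();
    }

    // Asynchronous send: enqueue the message and return without waiting for a reply.
    void send(Object message) {
        mailQueue.add(message);
    }

    // Behavior for one message; called only from the actor's own thread.
    protected abstract void receive(Object message);

    @Override
    public void run() {
        try {
            while (true) {
                receive(mailQueue.take());
            }
        } catch (InterruptedException e) {
            Thread.currentThread().interrupt(); // stop quietly when interrupted
        }
    }
}

// Example actor: sums Integer messages and reports the total when sent a future.
class CounterActor extends SketchActor {
    private int total = 0; // touched only by this actor's thread

    @Override
    @SuppressWarnings("unchecked")
    protected void receive(Object message) {
        if (message instanceof Integer) {
            total += (Integer) message;
        } else if (message instanceof CompletableFuture) {
            ((CompletableFuture<Integer>) message).complete(total);
        }
    }
}

class ActorDemo {
    public static void main(String[] args) {
        CounterActor counter = new CounterActor();
        counter.start();
        counter.send(1);
        counter.send(2);
        CompletableFuture<Integer> reply = new CompletableFuture<>();
        counter.send(reply);
        System.out.println("total = " + reply.join());
    }
}
```

Because the counter's state is only ever read or written from the actor's own thread, no locking is needed inside `receive`; all coordination happens through the mail queue.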

The CompOSE|Q architecture contains:

- The basic composable core services - Remote Creation, Distributed Snapshot and Directory Services with interaction constraints that ensure their safe concurrent execution with each other and with other meta level services.

- Services built using core services - Actor migration, replication of services and data, actor scheduling, distributed garbage collection, name services etc. Each of these services has its own interface definitions and interaction constraints.

- QoS enforcement mechanisms.


| The Two Level Meta Architectural Model (TLAM) |

Ensuring correctness in a purely reflective model involves reasoning about system level interactions by characterizing the semantics of shared distributed resources and understanding what correctness of the overall system means. The TLAM (Two Level Actor Machine) model was presented as a first step towards providing a formal semantics for specifying and reasoning about properties of and interactions between components of ODSs. In the TLAM, a system is composed of two kinds of actors, base actors and meta actors, distributed over a network of processing nodes. Base level actors carry out application level computation, while meta-actors are part of the runtime system, which manages system resources and controls the runtime behavior of the base level. Meta-actors communicate with each other via message passing as do base level actors, but they may also examine and modify the state of the base actors located on the same node. The TLAM uses reification (base object state as data at the meta object level) and reflection (modification of base object state by meta objects) with support for implicit invocation of meta objects in response to changes of base level state. It provides for full actor-style interaction of meta level objects. In the TLAM model, meta-level controllers define protocols and mechanisms that customize various aspects of distributed systems management. In practice, multiple system and application activities occur concurrently in a distributed system, e.g. scheduling, protocol processing, stream synchronization etc., and can therefore interfere with each other.

|

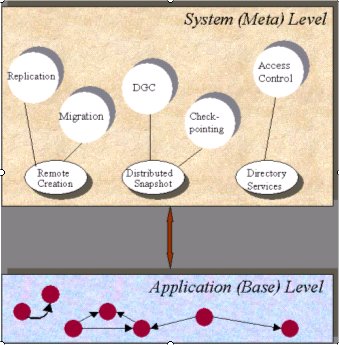

To ensure non-interference and manage the complexity of reasoning about components of ODSs in general, our strategy is to identify key system services where non-trivial interactions between the application and system occur, i.e. base-meta interactions. We refer to these key services as core services. Core services are used in specifying and implementing more complex activities within the framework as purely meta-level interactions. The development of suitable non-interference requirements allows us to reason about the composition of multiple system services; these services have constraints that must be obeyed to maintain composability (i.e. safe concurrent execution). We use commonly observed patterns in distributed systems to identify three meta level core activities (See Figure): Remote Creation (for migration, replication & load balancing), Distributed Snapshot (for check-pointing, distributed garbage collection, etc.) and Directory Services ( for access control, resource discovery and group communication). |
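The base-meta relationship in the TLAM can be illustrated with a small Java sketch. It assumes, purely for illustration, that base-level state is a key-value map and that nodes are identified by name; `BaseActor` and `MetaActor` are hypothetical names. The sketch shows reification (base state exposed as data), reflection (a meta-level change written back into the base actor) and the restriction that a meta-actor only touches base actors on its own node.

```java
import java.util.HashMap;
import java.util.Map;

// Hypothetical base-level actor: carries application state only.
class BaseActor {
    final String node;
    private final Map<String, Object> state = new HashMap<>();

    BaseActor(String node) {
        this.node = node;
    }

    void put(String key, Object value) {
        state.put(key, value);
    }

    Object get(String key) {
        return state.get(key);
    }

    // Used by the meta level to take a copy of the state.
    Map<String, Object> copyState() {
        return new HashMap<>(state);
    }
}

// Hypothetical meta-level actor: may examine and modify base actors on the same node only.
class MetaActor {
    private final String node;

    MetaActor(String node) {
        this.node = node;
    }

    // Reification: base-level state becomes plain data at the meta level.
    Map<String, Object> reify(BaseActor base) {
        requireSameNode(base);
        return base.copyState();
    }

    // Reflection: a meta-level decision is installed in the base actor's state.
    void reflect(BaseActor base, String key, Object value) {
        requireSameNode(base);
        base.put(key, value);
    }

    private void requireSameNode(BaseActor base) {
        if (!node.equals(base.node)) {
            throw new IllegalStateException("base actor resides on node " + base.node);
        }
    }
}
```

A meta-actor that needs information about a base actor on another node would, in this picture, send a message to a meta-actor on that node rather than reach across directly.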


| The Resource Management Services |

Implementation of sophisticated policies and mechanisms for QoS management is made possible by providing support for common services in CompOSE|Q. For instance, object scheduling mechanisms use the basic remote creation core service to assign newly created objects/actors to nodes with adequate resources. Using generalized state capture facilities, we are developing a checkpointing service for capturing causal orders of executions in the system that can be used for monitoring and debugging distributed computations. A state broadcast mechanism is used to implement a clock synchronization service, which informs nodes about a global time value that can be used for time-related services.

- Remote creation: Remote creation is the process by which an actor is created on a specified node other than the node from which creation is initiated. It is a basic facility that can be used in other resource management activities such as load balancing, replication and migration. By encapsulating the interactions between the application and system level actors within the remote creation service, we can state requirements that ensure safe and correct composition of other resource management activities with remote creation. In a TLAM-based implementation, the control activities of remote creation are managed by remote creation meta-actors (RCMs) residing on every node in the system. A remote creation request has four parameters: a description (desc) of the fragment to be migrated, the remote node (N), any initial state to which the fragment has to be set, and the initiating actor (b). The RCM keeps track of the initiating actor b to ensure composability with other meta-level services [V98]. If the requester needs to know that the request has been completed, or needs the names of some of the newly created actors, this can be achieved by specifying appropriate messages as part of the requested fragment and observing their delivery.

- Distributed Snapshot Services: Global properties such as the number of application actors, the current reachability graph of distributed actors, the number of messages being processed and task queue sizes help in making runtime decisions such as load balancing, migration and garbage collection, leading to efficient runtime management of a distributed system. To fully represent the global state of the distributed system, we need a mechanism for recording the state of all nodes, including the portion of node state being communicated in the network channels. As state information is explicitly accessible only in nodes, a snapshot mechanism must ensure that node state information in channels is recorded at some node in the system (possibly the target node itself). The snapshot mechanisms we have devised allow application-level computation and system level services to proceed concurrently with the snapshot, thereby preserving application and service semantics. In order to initiate snapshot recording on every node and force messages in channels to reach a node, we have defined two wave protocols for message propagation that (a) visit all nodes exactly once, capturing node-resident information, and (b) traverse all links in the system exactly once, forcing messages on channels to reach nodes (where their state can be recorded).

- Migration: Migration is the process by which actors move from one node to another. The migration service allows for relocation of actors for easier access, availability and load balancing. By using remote creation as the basis for migration, we have ensured composability of migration with other meta-level services such as reachability snapshots and distributed check-pointing. A migration request is given by a pair (α,n), where α is the actor to be migrated and n is the destination node. This is interpreted as a request to move the computation carried out by α to the node n. In order to state explicitly the invariants maintained by the system during the migration process, we divide the process into three phases with respect to the actor being migrated and the node to which it is being migrated. The first phase, initiation, specifies the state of the system in which a received migration request can be processed. It determines the computation to be migrated by suspending the computation of the actor and noting its current description. In the second phase, the actual actor migration is performed using the remote creation (RC) service. The last phase finalizes the migration process and establishes transparent access to the migrated actor.

-

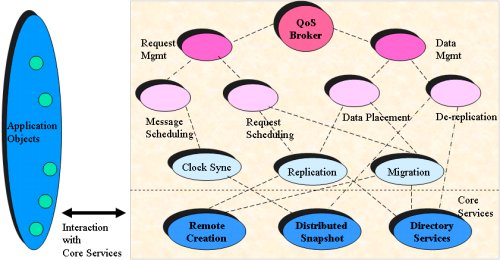

QoS Brokerage Service: This work illustrates the use of TLAM services in the design of mechanisms and policies needed to enforce QoS constraints in the actor-based runtime environment. We extend the basic meta-architectural framework to provide QoS based services to applications. The base level component of the meta architecture implements the functionality of the distributed session and deals with (a) data, which includes objects of varying media, types, e.g., video and audio files and (b) requests to access this data via sessions. The meta-level component deals with the coordination of multiple requests and sharing of existing resources among multiple requests. To provide coordination at the highest level and perform admission control for new incoming sessions, a meta-level entity called the QoS broker is being developed. The organization of the meta-level services in CompOSE|Q is illustrated in the Figure.
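The remote creation request and the three migration phases described in the list above can be sketched in Java as follows. This is only an illustration under stated assumptions: a node is modelled as an in-memory table, the fragment description doubles as the actor's name, suspension is modelled by removing the actor from the source table, and transparent access is modelled as a forwarding entry on the source node. All class names and the `"migration-meta-actor"` initiator are hypothetical.

```java
import java.util.HashMap;
import java.util.Map;

// Hypothetical request type holding the four parameters named in the text.
final class RemoteCreationRequest {
    final String desc;                      // description of the fragment
    final String targetNode;                // remote node N
    final Map<String, Object> initialState; // state the fragment starts with
    final String initiator;                 // initiating actor b

    RemoteCreationRequest(String desc, String targetNode,
                          Map<String, Object> initialState, String initiator) {
        this.desc = desc;
        this.targetNode = targetNode;
        this.initialState = initialState;
        this.initiator = initiator;
    }
}

// Hypothetical node: resident actors (name -> state) and a forwarding table.
class SketchNode {
    final Map<String, Map<String, Object>> actors = new HashMap<>();
    final Map<String, String> forwarding = new HashMap<>();
}

class MigrationSketch {
    // Stand-in for the RC service: create the described actor on the target node.
    static void remoteCreate(RemoteCreationRequest request, Map<String, SketchNode> network) {
        SketchNode target = network.get(request.targetNode);
        target.actors.put(request.desc, new HashMap<>(request.initialState));
    }

    // Migration request (alpha, n): move actor alpha from node 'from' to node n.
    static void migrate(String alpha, String from, String n, Map<String, SketchNode> network) {
        SketchNode source = network.get(from);
        // Phase 1 (initiation): suspend the actor and note its current description.
        // Removing it from the table stands in for suspension in this sketch.
        Map<String, Object> description = source.actors.remove(alpha);
        if (description == null) {
            throw new IllegalArgumentException(alpha + " is not on node " + from);
        }
        // Phase 2: perform the actual move through remote creation.
        remoteCreate(new RemoteCreationRequest(alpha, n, description, "migration-meta-actor"), network);
        // Phase 3 (finalization): assumed here to be a forwarding entry on the old node.
        source.forwarding.put(alpha, n);
    }
}
```

Routing every migration through `remoteCreate` mirrors the point made in the text: constraints stated once for remote creation then carry over to the services built on top of it.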

The two main functions of the QoS broker are (a) data management and (b) request management. The data management component decides the placement of data in the distributed system, i.e. it decides when and where to create additional replicas of data. It also determines when additional replicas of data actors are no longer needed and can be garbage collected/dereplicated. We implement adaptive admission control mechanisms in the request-scheduling module that assigns requests to servers and ensures cost-effective utilization of resources. The message-scheduling module ensures QoS constraint satisfaction of requests that have already been initiated. The data and request management functions in turn require auxiliary services such as clock synchronization, replication, dereplication and migration. So far, we have focused on the following services:

- Replication: to replicate data and request actors using adaptive and predictive techniques for selecting where, when and how fast replication should proceed.

- Dereplication: to dereplicate/garbage-collect data or request actors and optimize utilization of distributed storage based on current system load and expected future demands for the object.

- Migration: to migrate data or requests for load balancing, availability and locality. The interaction of migration with timing-based QoS constraints is an interesting issue, since migration can introduce playback jitter in multimedia applications through the explicit teardown and re-establishment of network connections.

The auxiliary services described above are developed using one or more of the core services: remote creation, distributed snapshot and the directory service. In order to ensure non-interference among the auxiliary services that are used to provide QoS, the specific mechanisms implemented for placement and scheduling must be designed not to conflict with each other. Currently, placement and dereplication operate on the basis of a (conservative) snapshot of the current resource allocation and use. The placement and dereplication services do not consider the exact times at which requests arrive; in contrast, an adaptive request scheduling process makes decisions based on the exact arrival times of requests. Without appropriate constraints on the usage of these services, however, inconsistencies can arise from their interaction. The broker coordinates the service interaction by constraining the behavior of the auxiliary placement and scheduling services. For instance, the dereplication service does not dereplicate a replica to which the request scheduling process is making an assignment. Furthermore, a replica assigned to an active request should not be physically dereplicated. The broker also ensures that the dereplication and placement meta-level services do not cancel one another out. The interaction between dereplication and placement is not a functional correctness issue, but it does affect the cost-effective performance of the overall system.
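The two dereplication constraints mentioned above can be made concrete with a small Java sketch. It is an assumption-laden illustration, not the broker's actual design: replicas are identified by strings, a single request-scheduling process is assumed (so a replica is under at most one pending assignment at a time), and `BrokerSketch` with its method names is hypothetical.

```java
import java.util.HashMap;
import java.util.HashSet;
import java.util.Map;
import java.util.Set;

// Hypothetical broker bookkeeping that keeps dereplication from interfering with scheduling.
class BrokerSketch {
    private final Set<String> replicas = new HashSet<>();
    // Replicas the request scheduler is currently making an assignment to.
    private final Set<String> beingAssigned = new HashSet<>();
    // Number of active requests currently served by each replica.
    private final Map<String, Integer> activeRequests = new HashMap<>();

    synchronized void addReplica(String replica) {
        replicas.add(replica);
    }

    // Called by the scheduler before it commits a request to a replica.
    synchronized boolean beginAssignment(String replica) {
        if (!replicas.contains(replica)) {
            return false; // replica no longer exists; the scheduler must pick another
        }
        beingAssigned.add(replica);
        return true;
    }

    // The assignment is committed: the request is now active on the replica.
    synchronized void completeAssignment(String replica) {
        beingAssigned.remove(replica);
        activeRequests.merge(replica, 1, Integer::sum);
    }

    synchronized void requestFinished(String replica) {
        // Decrement the count; returning null removes the entry when it reaches zero.
        activeRequests.computeIfPresent(replica, (key, count) -> count > 1 ? count - 1 : null);
    }

    // Called by the dereplication service; refuses if either constraint would be violated.
    synchronized boolean tryDereplicate(String replica) {
        if (beingAssigned.contains(replica) || activeRequests.containsKey(replica)) {
            return false;
        }
        return replicas.remove(replica);
    }
}
```

Funnelling both services through one synchronized object is the simplest way to express the constraint in a single JVM; a distributed broker would need a message-based equivalent.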


| The Reflective Communication Service Architecture |

In order to provide correct composition of communication services to QoS-based applications in a transparent and scalable fashion, while ensuring correctness of basic middleware services in a meta level architecture for distributed resource management (e.g. garbage collection, remote creation), the TLAM model is extended with a composable reflective communication framework (CRCF), which customizes the base level communication services among a group of objects as follows. Each base level actor has a meta level actor, called a messenger, which serves as the customized and transparent mail queue for that base level actor. There is one communication manager in every node of the distributed system, which implements and controls the correct composition of the communication services specified by the messengers. The messenger has four message queues: the up and down queues are used to communicate with its base level actor, serving as the actor's send buffer and customized mail queue respectively, while the in and out queues are used for interaction with the communication manager, requesting communication services that satisfy QoS constraints. The up and down queues hold raw messages from and to base level actors, while the out and in queues hold processed messages, i.e. messages with the required protocols enforced. Furthermore, the communication manager has a set of communication protocol actors, each of them implementing a particular communication service provided by the framework (e.g. reliable protocol, in-order protocol). Communication services can be added (plugged in) or removed (plugged out) dynamically without side effects. This scheme allows us to abstract a core set of communication services and share it between the different messengers on a node, simplifying the synchronization and composition process while encouraging separation of concerns in the process of message transmission and reception.

In order to maintain accurate semantics and provide an efficient implementation of the architecture, the communication manager implements a set of meta level representatives, called pool actors. At any instant, a pool actor handles the communication services requested by a messenger for an individual message. In other words, every message requiring communication services is assigned a pool actor. The pool actor ensures the correct order of composition of the required services and provides a coordination mechanism between the messenger that requires the services and the protocols that provide them. Reusable pool actors are an efficient way to handle the service requests of each messenger without incurring the bottleneck that would result from centralizing the services in the node communication manager. In summary, the notion of pool actors provides separation of concerns and manageable concurrency in the communication process.
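A simplified Java sketch of the outgoing path through a messenger may help make the queue roles concrete. It makes several assumptions that are not in the text: messages are plain strings, a protocol is modelled as a function that wraps a message, and the messenger holds the protocol list and pool actor directly instead of obtaining them from the communication manager. `MessengerSketch`, `PoolActorSketch` and `plugIn` are hypothetical names, and only the up-to-out direction is implemented.

```java
import java.util.ArrayDeque;
import java.util.ArrayList;
import java.util.List;
import java.util.Queue;
import java.util.function.UnaryOperator;

// Hypothetical pool actor: applies the services requested for one message, in order.
class PoolActorSketch {
    String compose(String rawMessage, List<UnaryOperator<String>> protocols) {
        String processed = rawMessage;
        for (UnaryOperator<String> protocol : protocols) {
            processed = protocol.apply(processed);
        }
        return processed;
    }
}

// Hypothetical messenger with the four queues named in the text.
class MessengerSketch {
    final Queue<String> up = new ArrayDeque<>();   // raw messages sent by the base actor
    final Queue<String> down = new ArrayDeque<>(); // raw messages for the base actor (unused here)
    final Queue<String> out = new ArrayDeque<>();  // processed outgoing messages
    final Queue<String> in = new ArrayDeque<>();   // processed incoming messages (unused here)

    private final List<UnaryOperator<String>> protocols = new ArrayList<>();
    private final PoolActorSketch poolActor = new PoolActorSketch();

    // Plug in a communication service; services are applied in plug-in order.
    void plugIn(UnaryOperator<String> protocol) {
        protocols.add(protocol);
    }

    // Move every raw message from the up queue to the out queue with protocols enforced.
    void processOutgoing() {
        String raw;
        while ((raw = up.poll()) != null) {
            out.add(poolActor.compose(raw, protocols));
        }
    }
}
```

With no protocols plugged in, `processOutgoing` passes raw messages through unchanged, which corresponds to the no-protocol case where raw messages are simply tunnelled to the transport layer.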


| CompOSE|Q Runtime Architecture |

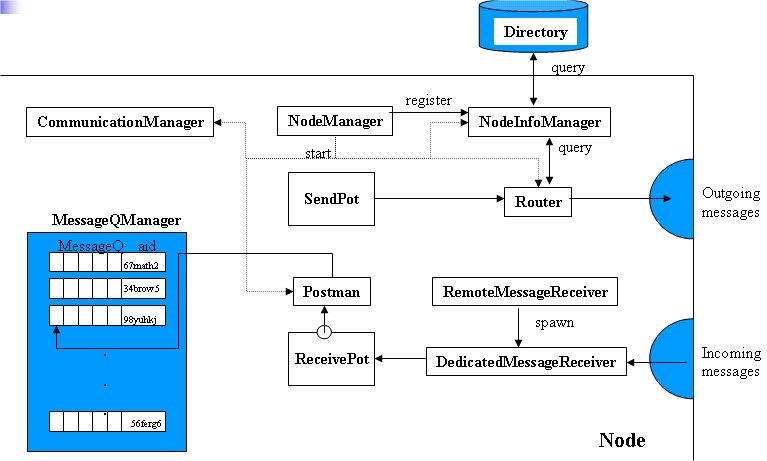

The current middleware environment has been implemented using Java, due to its many favorable features such as its portability across a wide variety of platforms, wide user base and support for flexibility through introspection. In our approach, we suitably 'constrain' the Java programming language to achieve Actor semantics. In order to assist the three core services in achieving their task easily and efficiently, the run-time system consists of:

- A NodeManager that manages and coordinates various components on a node.

- A NodeInfoManager that manages information needed by the local actors and interfaces with the directory service.

- A communication sub-system that handles messaging between actors.

- The NodeManager: Each node running CompOSE|Q has one NodeManager to manage actors on that node, as well as to start up and shut down various other modules of the run-time system. When a new actor is created, it registers itself with the NodeManager and is identified by an Actor ID (aid). The NodeManager enters the new actor into a local-table, which helps keep track of the actor for activities such as node checkpointing and node shutdown, and notifies the MessageQManager entity, which allocates a message queue that serves as the “in” Queue for the actor. The MessageQManager is responsible for managing mail queues for all actors on a node. To start CompOSE|Q on a node, the NodeManager has to be started first. It, in turn, initiates the various other modules and communication components.

- The NodeInfoManager: The NodeInfoManager is a repository of information as well as an interface to the main directory service in the distributed architecture. Currently, the NodeInfoManager implements basic functionality to: 1) register an actor with the directory service so that it is accessible to all other nodes; 2) search for a particular actor to find out which node that actor is currently on; and 3) search for an actor object given the class name (a rudimentary naming service). The NodeInfoManager has a local-table, which contains references to all local actors (updated and maintained by the NodeManager), and a remote-actor cache, which contains information about recently accessed remote actors.

- The Communication Subsystem: The communication transport layer and the CRCF module (above the transport layer) together compose the node communication subsystem. The message transport layer provides a framework for sending outgoing messages to the appropriate node (routing) and resolving incoming messages to their appropriate actor queues (message resolution). The CRCF module is responsible for the implementation of communication services (and their composition). Separation of these layers allows for independent customization of protocol implementations and the messaging runtime. This facilitates correct composition of protocols without interfering with the runtime communication semantics. The communication transport layer consists of the following components: a Router, a Postman and a RemoteMessageReceiver. The transport layer maintains two message queues on each node: SendPot for outgoing messages and ReceivePot for incoming messages. The Router consults the NodeInfoManager to obtain the current location (node) of the target actor. If the target actor is local (i.e. on the same node), the Router puts the message directly into the node's ReceivePot. Otherwise, it sends the message to the remote node. The RemoteMessageReceiver (RMR) on the target node extracts the incoming message and puts it into that node's ReceivePot. The Postman then picks up the message and adds it to the target actor's “in” Queue. The communication manager is instantiated in each node during system startup. When an actor is created and protocol composition services are not desired or required, its messenger is not created and the actor sends and receives raw messages using the transport layer. When flexible communication is required or desired, an independent messenger is created for every base level actor and the entire CRCF functionality is invoked. The overhead of the CRCF module is minimized for communications with no protocols attached: in this scenario, we tunnel raw messages through the actor's messenger directly to the transport layer.
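The message path through the transport layer described above can be sketched in Java as follows. The sketch is single-threaded and in-memory, and it rests on assumptions that the text does not spell out: a shared map stands in for NodeInfoManager lookups, another shared map stands in for the network, and the Router is assumed to drain the SendPot. The names `Envelope`, `TransportSketch`, `routePending`, `onRemoteMessage` and `deliverPending` are hypothetical.

```java
import java.util.ArrayDeque;
import java.util.HashMap;
import java.util.Map;
import java.util.Queue;

// Hypothetical message envelope: target actor name plus payload.
final class Envelope {
    final String targetActor;
    final String payload;

    Envelope(String targetActor, String payload) {
        this.targetActor = targetActor;
        this.payload = payload;
    }
}

// One node's transport layer; the shared maps stand in for the NodeInfoManager and the network.
class TransportSketch {
    private final String nodeName;
    private final Map<String, String> actorLocations;   // actor -> node
    private final Map<String, TransportSketch> network; // node -> transport layer
    final Queue<Envelope> sendPot = new ArrayDeque<>();
    final Queue<Envelope> receivePot = new ArrayDeque<>();
    final Map<String, Queue<String>> inQueues = new HashMap<>();

    TransportSketch(String nodeName, Map<String, String> actorLocations,
                    Map<String, TransportSketch> network) {
        this.nodeName = nodeName;
        this.actorLocations = actorLocations;
        this.network = network;
        network.put(nodeName, this);
    }

    // Router: resolve each outgoing message to the local ReceivePot or to a remote node.
    void routePending() {
        Envelope envelope;
        while ((envelope = sendPot.poll()) != null) {
            String location = actorLocations.get(envelope.targetActor);
            if (location == null) {
                throw new IllegalStateException("unknown actor " + envelope.targetActor);
            }
            if (nodeName.equals(location)) {
                receivePot.add(envelope);
            } else {
                network.get(location).onRemoteMessage(envelope);
            }
        }
    }

    // RemoteMessageReceiver: accept a message sent from another node.
    void onRemoteMessage(Envelope envelope) {
        receivePot.add(envelope);
    }

    // Postman: move messages from the ReceivePot to each target actor's "in" queue.
    void deliverPending() {
        Envelope envelope;
        while ((envelope = receivePot.poll()) != null) {
            inQueues.computeIfAbsent(envelope.targetActor, key -> new ArrayDeque<>())
                    .add(envelope.payload);
        }
    }
}
```

Local and remote messages converge on the ReceivePot, so the Postman needs only one delivery path regardless of where a message originated.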
