Running your jar on openlab

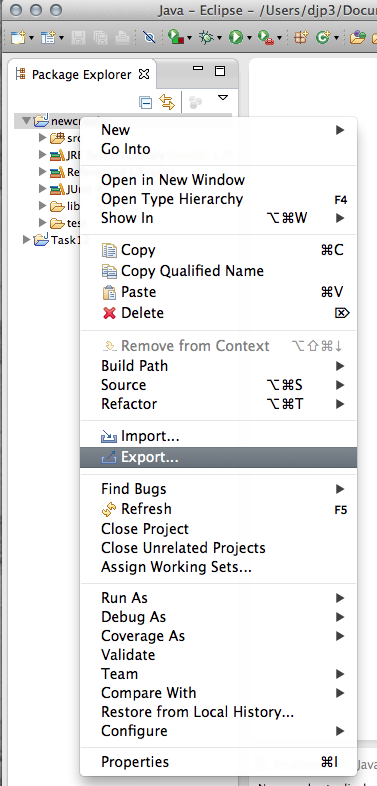

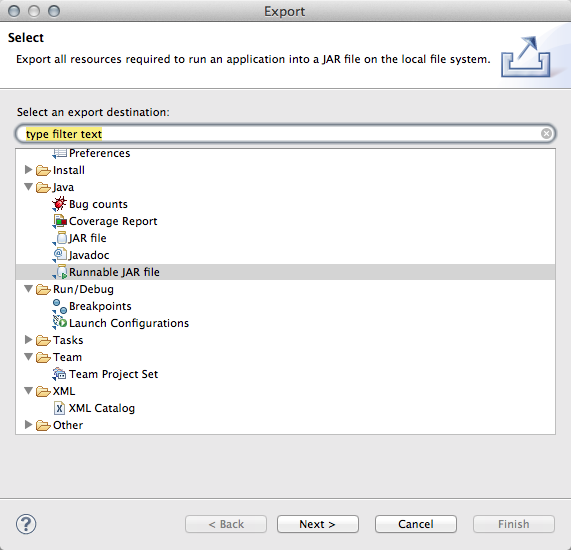

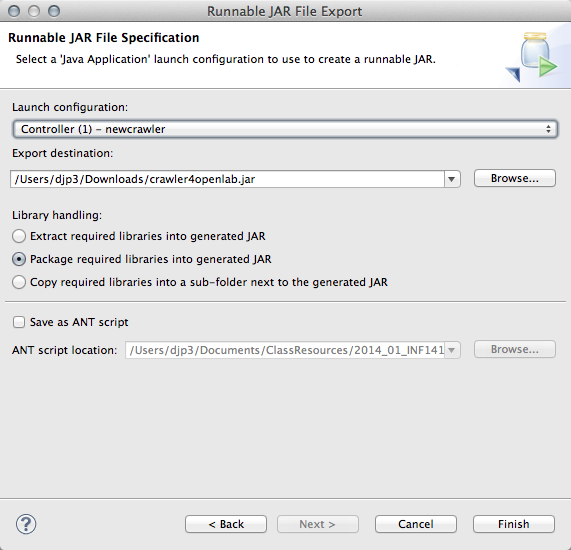

- Once you have tested your crawler on your local machine in Eclipse, export your project as a "Runnable Jar". A runnable jar packages all the required libraries into one big .jar file, so you don't have to move them separately. That's convenient, but it may violate the libraries' licensing agreements, so don't do it with code you plan to distribute. The launch configuration dropdown asks which "main" the runnable jar should start from. If you have already run your program in Eclipse, it should appear as an option in the dropdown.

- Make sure you update any file paths in your program to match the openlab file hierarchy, which is different from the one on your local machine.

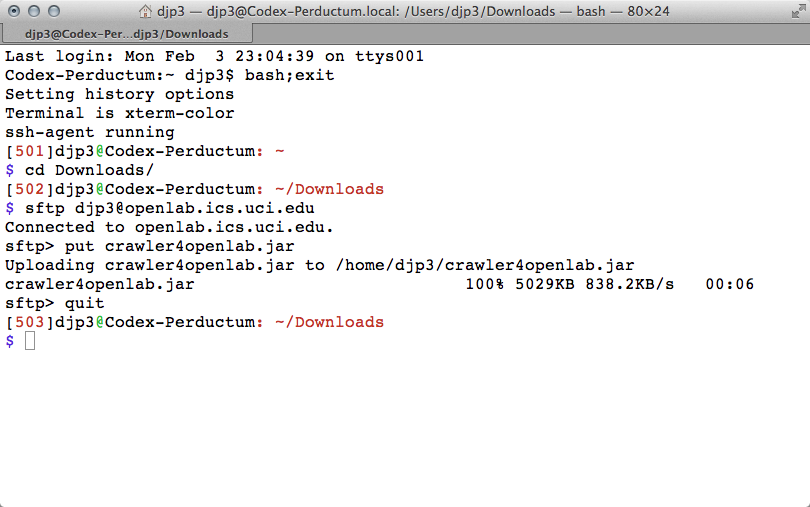

- Now you have to move the jar you just made to openlab.

- On a Mac you can do that with "sftp" from a Terminal. On Windows, use WinSCP.
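If you would rather copy the jar with a single non-interactive command, `scp` (which ships alongside sftp in OpenSSH) can stand in for the interactive sftp session. The sketch below only prints the command by default. The login `your_login`, the host `openlab.example.edu`, and the `run` helper are placeholders invented for this example, not real values.

```shell
#!/bin/sh
# Placeholders (assumptions): substitute your own login and the real openlab host name.
REMOTE_USER="your_login"
REMOTE_HOST="openlab.example.edu"
JAR="crawler4openlab.jar"

# Hypothetical helper: print the command when DRY_RUN is 1 (the default), otherwise run it.
run() {
  if [ "${DRY_RUN:-1}" = "1" ]; then
    echo "$*"
  else
    "$@"
  fi
}

# Copy the jar into your home directory on the remote machine.
run scp "$JAR" "$REMOTE_USER@$REMOTE_HOST:"
```

Run it once as is to check the command that would be issued, then again with `DRY_RUN=0` set in the environment to perform the copy.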

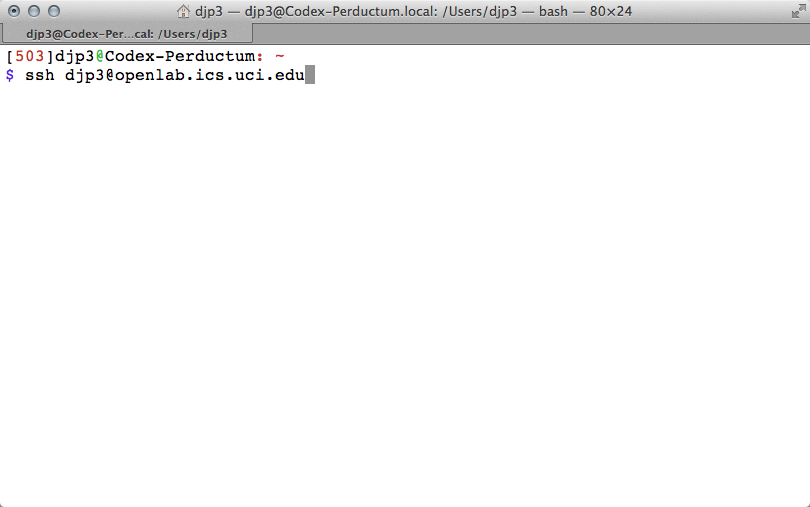

- Now you need to open a terminal window on the openlab cluster of machines.

- I use "ssh" to log in to the remote machine. If you are on Windows, you will need to use PuTTY.

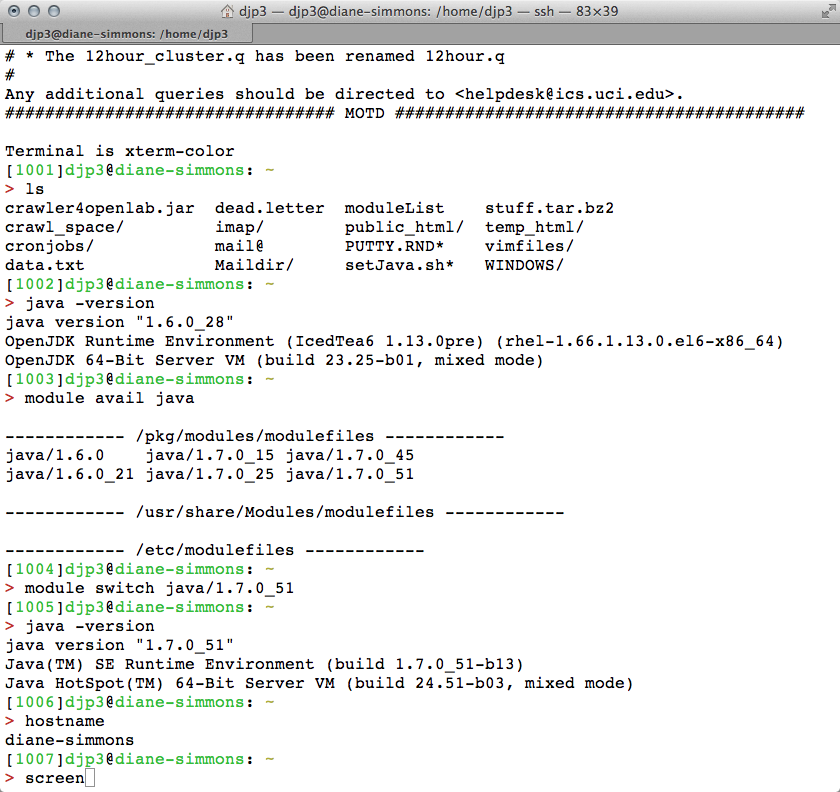

- Once there, use "ls" to check that your jar arrived okay.

- Then make sure you are using the right version of Java ("java -version" shows which one is active). If you aren't, use the "module" command to switch to Java 1.7.

- Note the name of the actual machine you connected to using "hostname". You will need it to get back to your crawler later.

- Then run the "screen" command, which gives you a place to start a long-running process.
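The checks above can be sketched as a small script. It assumes the jar sits in the current directory. The `java_major_minor` helper is hypothetical, and the name of the Java module on openlab is not given here, so the script only suggests `module avail java` rather than guessing a module name.

```shell
#!/bin/sh
# Hypothetical helper: pull the major.minor part (for example 1.7) out of
# `java -version` output. Prints nothing if the version string has no dot.
java_major_minor() {
  sed -n 's/.*version "\([0-9]*\.[0-9]*\).*/\1/p' | head -n 1
}

WANTED="1.7"                 # the version this page asks for
JAR="crawler4openlab.jar"

# Confirm the jar arrived in the current directory.
if [ -f "$JAR" ]; then
  echo "found $JAR"
else
  echo "missing $JAR"
fi

# `java -version` writes to stderr, so redirect it before parsing.
if command -v java >/dev/null 2>&1; then
  found=$(java -version 2>&1 | java_major_minor)
  if [ "$found" = "$WANTED" ]; then
    echo "java $found is active"
  else
    # The exact module name is site-specific, so list the candidates first.
    echo "java '$found' is active; try 'module avail java', then 'module load <name>'"
  fi
else
  echo "java is not on PATH; try 'module avail java', then 'module load <name>'"
fi

# Show which machine this is so you can come back to the same one later.
echo "running on $(hostname)"
```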

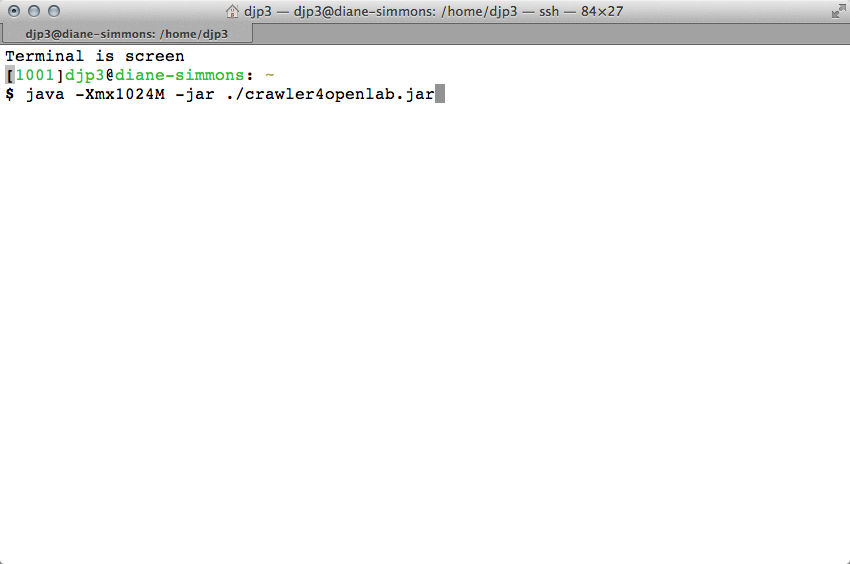

- "screen" will open up a virtual terminal that you can leave running after you log out

- Then use the command "java -Xmx1024M -jar crawler4openlab.jar" to start your crawler. The -Xmx option sets the maximum amount of heap memory Java may use while crawling. You shouldn't need much because the frontier is kept on disk.

- Update: ICS support tells me that we should be using "nice -n 19 java -Xmx1024M -jar crawler4openlab.jar". The additional bit at the beginning gives our long-running processes the lowest priority, so they defer to other short-running processes when there aren't enough CPUs to go around.

- Once you are happy that everything is going okay, detach from the screen with Ctrl-A then "d".

- This will send you back to the real terminal.

- If you log out with the "exit" command, your crawler keeps running. You need to make sure you keep monitoring it.
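The start-and-detach steps can also be collapsed into one command that launches the crawler inside a named, already detached screen session and sends its output to a log file for monitoring. This is only a sketch: the session name `crawler`, the log file `crawl.log`, and the `run` helper are assumptions of this example, and it prints the command instead of running it unless `DRY_RUN=0` is set.

```shell
#!/bin/sh
JAR="crawler4openlab.jar"
HEAP_MB=1024
SESSION="crawler"      # hypothetical session name
LOG="crawl.log"        # hypothetical log file

# The command line from the steps above, at the lowest CPU priority.
CRAWL_CMD="nice -n 19 java -Xmx${HEAP_MB}M -jar $JAR"

# Hypothetical helper: print the command when DRY_RUN is 1 (the default), otherwise run it.
run() {
  if [ "${DRY_RUN:-1}" = "1" ]; then
    echo "$*"
  else
    "$@"
  fi
}

# -d -m starts the session detached; -S gives it a name to reattach to later.
run screen -dmS "$SESSION" sh -c "$CRAWL_CMD > $LOG 2>&1"
```

Once the session is running, `tail -f crawl.log` shows progress without attaching, and `screen -r crawler` reattaches to it.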

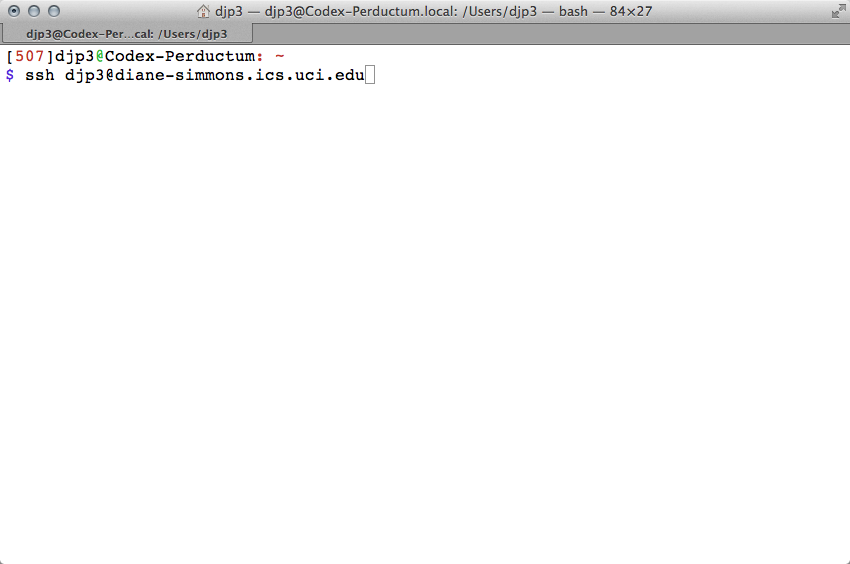

- To go back to it, log directly into the host that you were using (the name that "hostname" gave you earlier).

- Once on the same host, type "screen -r" and you will reattach to the virtual screen that your crawler is running in. There you can look at any output that you are generating. Be careful: hitting Ctrl-C will kill your process. Hit Ctrl-A then "d" to detach again, as often as you like.
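Logging in and reattaching can be combined into one step. In this sketch the login, the host name, and the `run` helper are placeholders. Use the machine name you noted with "hostname", not the general openlab address, because the screen session lives only on that one machine.

```shell
#!/bin/sh
# Placeholders (assumptions): your login and the exact machine name you noted earlier.
REMOTE_USER="your_login"
CRAWL_HOST="host-you-noted.example.edu"

# Hypothetical helper: print the command when DRY_RUN is 1 (the default), otherwise run it.
run() {
  if [ "${DRY_RUN:-1}" = "1" ]; then
    echo "$*"
  else
    "$@"
  fi
}

# -t gives ssh a terminal, which screen needs; screen -r reattaches on arrival.
run ssh -t "$REMOTE_USER@$CRAWL_HOST" screen -r
```

If more than one screen session exists on that host, `screen -ls` lists them so you can pass the right one to `screen -r`.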