Research: Project Highlights & References

Papers & code for selected research projects. See my complete publication list for more detail.

Memoized Variational Inference & Bayesian Nonparametrics

NSF CAREER Award

Hierarchical Dirichlet processes (HDPs) provide a foundation for Bayesian nonparametric mixture models, topic models, temporal models, and relational models. We develop a scalable family of variational inference algorithms that adapts the number of clusters, topics, states, or communities online as data is observed.

- NIPS 2017: Patch-based HDP mixture models for natural image denoising and deblurring.

- arXiv 2016: Sparse posteriors for scalable learning of high-dimensional models.

- NIPS 2015: Memoized variational inference for HDP hidden Markov models.

- AIStats 2015: Memoized variational inference for HDP topic models.

- NIPS 2013: Memoized variational inference for DP mixture models.

- NIPS 2013: Stochastic variational inference for HDP mixed membership relational models.

- NIPS 2012: Stochastic variational inference for HDP topic models.

- BNPy: Bayesian nonparametric clustering in Python.
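The core bookkeeping of memoized variational inference can be sketched in a few lines. This is a simplified illustration, not BNPy's implementation. It assumes a one-dimensional Gaussian mixture with a fixed number of components, unit variance, and equal weights, and it omits the birth and merge moves that let the number of clusters adapt. Each batch's summary statistics are cached. When a batch is revisited, its stale contribution is subtracted from the global statistics and replaced, so the global step always sees exact whole-dataset sums. All function names here are hypothetical.

```python
import numpy as np

def local_step(x, means):
    """Batch E-step: responsibilities under unit-variance, equally weighted Gaussians (assumed model)."""
    logp = -0.5 * (x[:, None] - means[None, :]) ** 2
    logp -= logp.max(axis=1, keepdims=True)   # stabilize before exponentiating
    r = np.exp(logp)
    return r / r.sum(axis=1, keepdims=True)

def memoized_pass(batches, means, cache, N, S, prior_precision=1.0):
    """One pass over all batches. N (soft counts) and S (weighted sums) are global arrays updated in place."""
    for b, x in enumerate(batches):
        r = local_step(x, means)
        N_b, S_b = r.sum(axis=0), r.T @ x
        if b in cache:                       # forget this batch's stale contribution
            N -= cache[b][0]
            S -= cache[b][1]
        N += N_b                             # and add its fresh one
        S += S_b
        cache[b] = (N_b, S_b)
        # Global step: posterior mean of each component under a N(0, 1/prior_precision) prior
        means = S / (N + prior_precision)
    return means

rng = np.random.default_rng(0)
data = np.concatenate([rng.normal(-3.0, 1.0, 200), rng.normal(3.0, 1.0, 200)])
rng.shuffle(data)
batches = np.array_split(data, 4)
cache, N, S = {}, np.zeros(2), np.zeros(2)
means = np.array([-1.0, 1.0])
for _ in range(5):
    means = memoized_pass(batches, means, cache, N, S)
print(np.sort(means))   # should land near -3 and 3
```

Unlike a stochastic update with a decaying learning rate, the subtract-and-replace step means no information from earlier batches is forgotten or overweighted.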

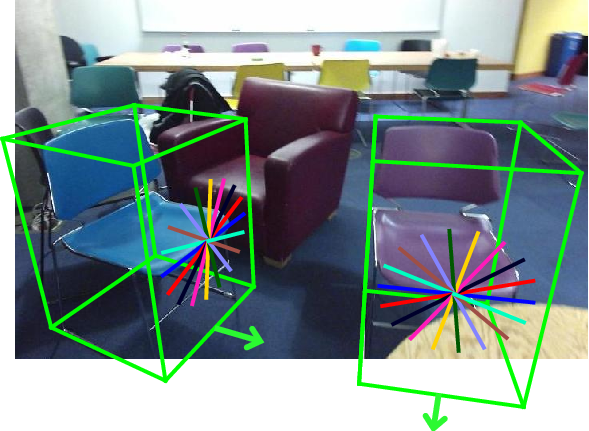

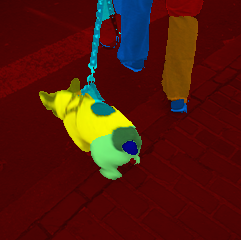

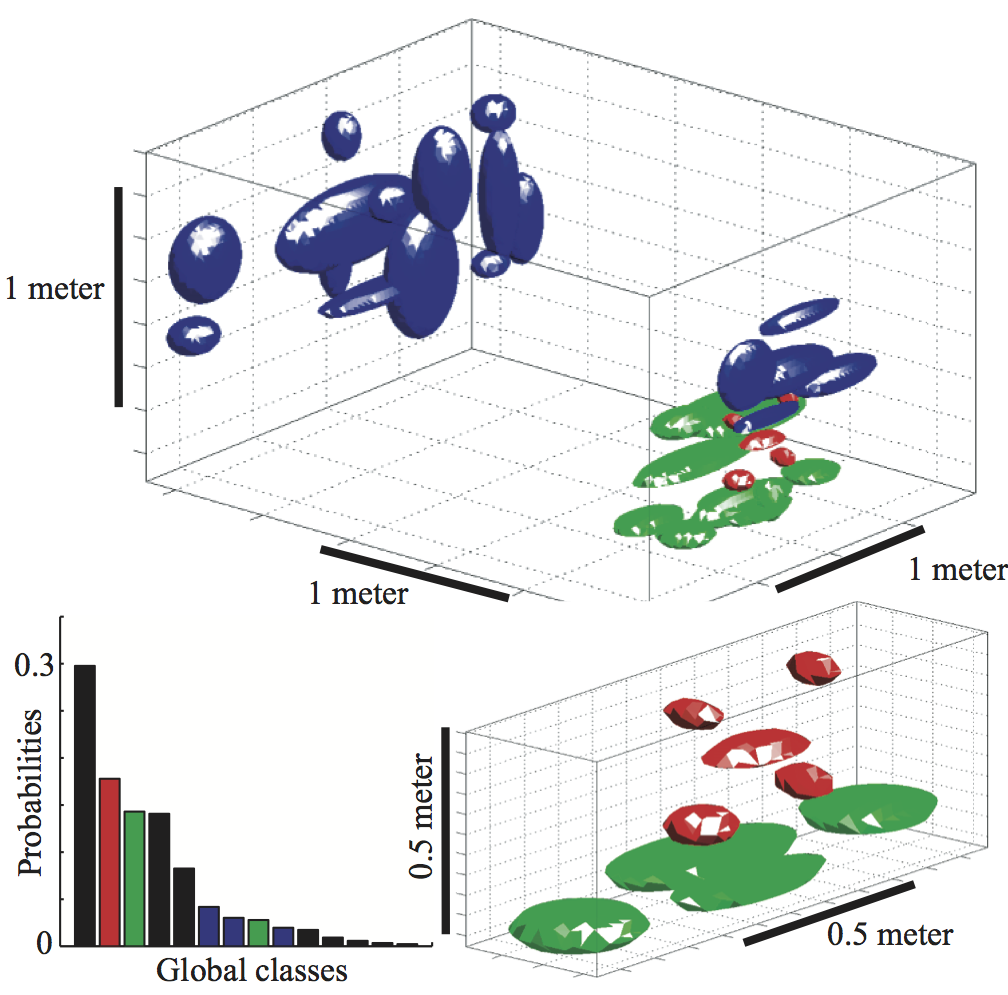

3D Object Detection & Scene Understanding

We use RGB-D images to learn contextual relationships between object categories and the 3D layout of indoor scenes. Our cloud of oriented gradient (COG) descriptor links the 2D appearance and 3D pose of object categories, accounting for perspective projection to produce state-of-the-art object detectors.

- PAMI 2019: Extended presentation of our cascaded 3D detection framework, integrating COG descriptors, support surfaces, and contextual cues.

- CVPR 2018: Support surfaces improve 3D detection accuracy, especially for small objects.

- CVPR 2016: Contextual cascades for indoor scene understanding from COG descriptors.

- 3DV 2017: Improved estimation of dense scene flow (3D geometry and motion) using cascades informed by semantic segmentations, with applications to autonomous driving.

Diverse Particle Belief Propagation

NSF Robust Intelligence Award

A fusion of max-product belief propagation and particle filters that reliably finds modes of continuous posterior distributions. A submodular optimization algorithm replaces the fragile stochastic resampling of standard particle methods. Applications include human pose estimation and protein structure prediction.
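Greedy selection is the standard way to approximately maximize a monotone submodular objective. As a hedged illustration of diverse particle selection, the sketch below greedily picks particles to maximize a weighted facility-location coverage score under a Gaussian similarity. This objective, the `bandwidth` parameter, and the function name are assumptions chosen for illustration, not the exact selection criterion used in our papers.

```python
import numpy as np

def greedy_diverse_select(particles, weights, num_keep, bandwidth=1.0):
    """Greedily maximize F(S) = sum_j weights[j] * max_{i in S} sim(i, j).

    With nonnegative weights F is monotone submodular, so greedy selection
    is within a factor (1 - 1/e) of the optimal subset of size num_keep.
    """
    d2 = ((particles[:, None, :] - particles[None, :, :]) ** 2).sum(axis=-1)
    sim = np.exp(-0.5 * d2 / bandwidth ** 2)     # sim[i, j]: how well particle i represents j
    coverage = np.zeros(len(particles))          # best similarity achieved so far for each j
    selected = []
    for _ in range(num_keep):
        gains = (weights[None, :] * np.maximum(sim - coverage[None, :], 0.0)).sum(axis=1)
        if selected:
            gains[selected] = -np.inf            # never pick the same particle twice
        best = int(np.argmax(gains))
        selected.append(best)
        coverage = np.maximum(coverage, sim[best])
    return selected

# Two modes; the right-hand one carries more posterior weight.
particles = np.array([[-5.0], [-4.9], [5.0], [5.1], [4.9]])
weights = np.array([0.1, 0.1, 0.3, 0.3, 0.2])
chosen = greedy_diverse_select(particles, weights, num_keep=2)
print(chosen)   # one particle from each mode
```

Stochastic resampling here could easily keep only particles near the heavier mode. The coverage objective instead rewards a second particle that explains the otherwise uncovered left mode.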

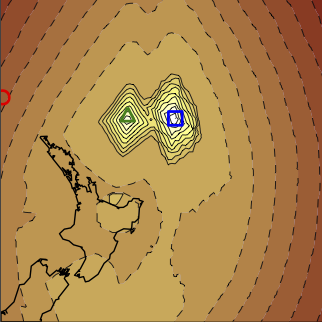

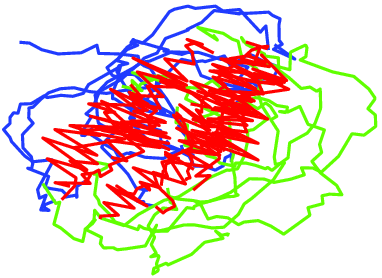

Vertically Integrated Global Seismic Monitoring

2014 ISBA Mitchell Prize for Bayesian analysis of an important applied problem

The automated processing of multiple seismic signals to detect and localize seismic events is a central tool in both geophysics and nuclear treaty verification. Our Bayesian seismic monitoring system, NET-VISA, is learned from historical data provided by the UN Preparatory Commission for the Comprehensive Nuclear-Test-Ban Treaty Organization (CTBTO). Compared with the CTBTO's existing automated system, NET-VISA reduces the number of missed events by 60%.

- News coverage: Brown University and Brown CS.

- Bulletin Seismological Soc. America 2013: NET-VISA generative model, inference, & validation.

- AAAI 2011: Short summary highlighting NET-VISA model & results (Nectar track).

- NIPS 2010: Original conference presentation of NET-VISA model.

- UAI 2010: Monte Carlo inference for related open-universe probabilistic models.

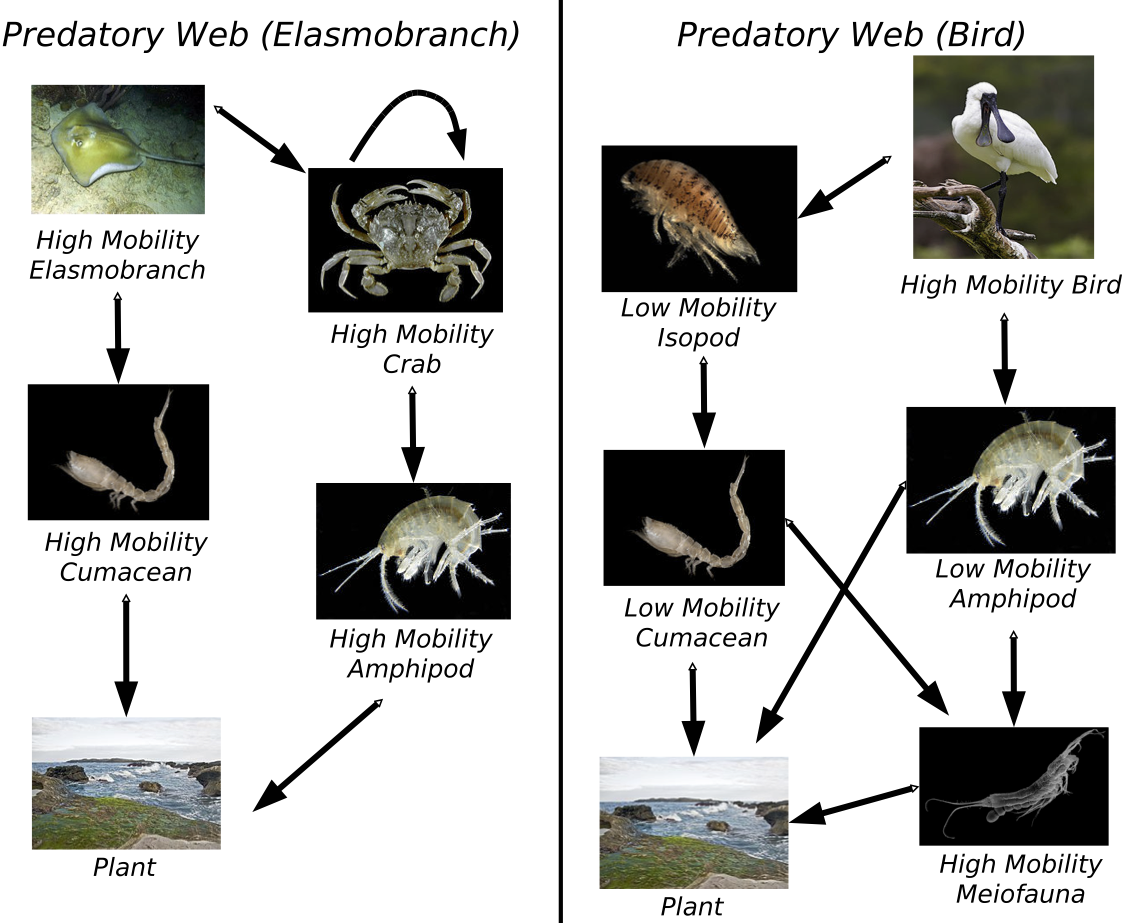

Layered Video Segmentation & Motion Estimation

Layered models simultaneously segment scenes into regions of coherent structure and estimate dense motion (optical flow) fields. By explicitly modeling occlusion relationships and designing sophisticated CRF priors, we achieve state-of-the-art motion estimates and interpretable segmentations.

- CVPR 2015: Layered estimation of 3D motion (scene flow) from RGB-D videos.

- CVPR 2013: A densely-connected layered prior for segmenting foreground & background motion.

- CVPR 2012: Discrete optimization for effective inference of multiple flow layers.

- NIPS 2010: Original formulation of layered optical flow model for image sequences.

- CVPR 2012 & NIPS 2008: Layered Pitman-Yor processes for static image segmentation.

- Software: D. Sun's Matlab code for dense motion estimation and layered segmentation.
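To make the occlusion reasoning of layered models concrete, here is a minimal sketch under strong simplifying assumptions. Layers are given as binary support masks already sorted front to back, whereas the models above infer soft supports, depth order, and flow jointly. Each pixel is explained by the nearest layer that covers it, and a layer's pixels hidden by nearer layers are occluded. The function names are hypothetical.

```python
import numpy as np

def visible_layer(masks):
    """Label each pixel with the front-most layer whose support covers it.

    masks: (num_layers, H, W) boolean array, index 0 = nearest layer (assumed order).
    Returns an (H, W) int array, with -1 where no layer has support.
    """
    masks = np.asarray(masks, dtype=bool)
    front = np.argmax(masks, axis=0)              # first True along the depth axis
    return np.where(masks.any(axis=0), front, -1)

def occluded_pixels(masks, layer):
    """Pixels where `layer` has support but a nearer layer hides it."""
    masks = np.asarray(masks, dtype=bool)
    return masks[layer] & (visible_layer(masks) != layer)

# Two overlapping layers on a 3 x 4 image: layer 0 (front) covers columns 0-1,
# layer 1 (behind) covers columns 1-2.
masks = np.zeros((2, 3, 4), dtype=bool)
masks[0, :, :2] = True
masks[1, :, 1:3] = True
print(visible_layer(masks)[0].tolist())       # [0, 0, 1, -1]
print(int(occluded_pixels(masks, 1).sum()))   # 3
```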

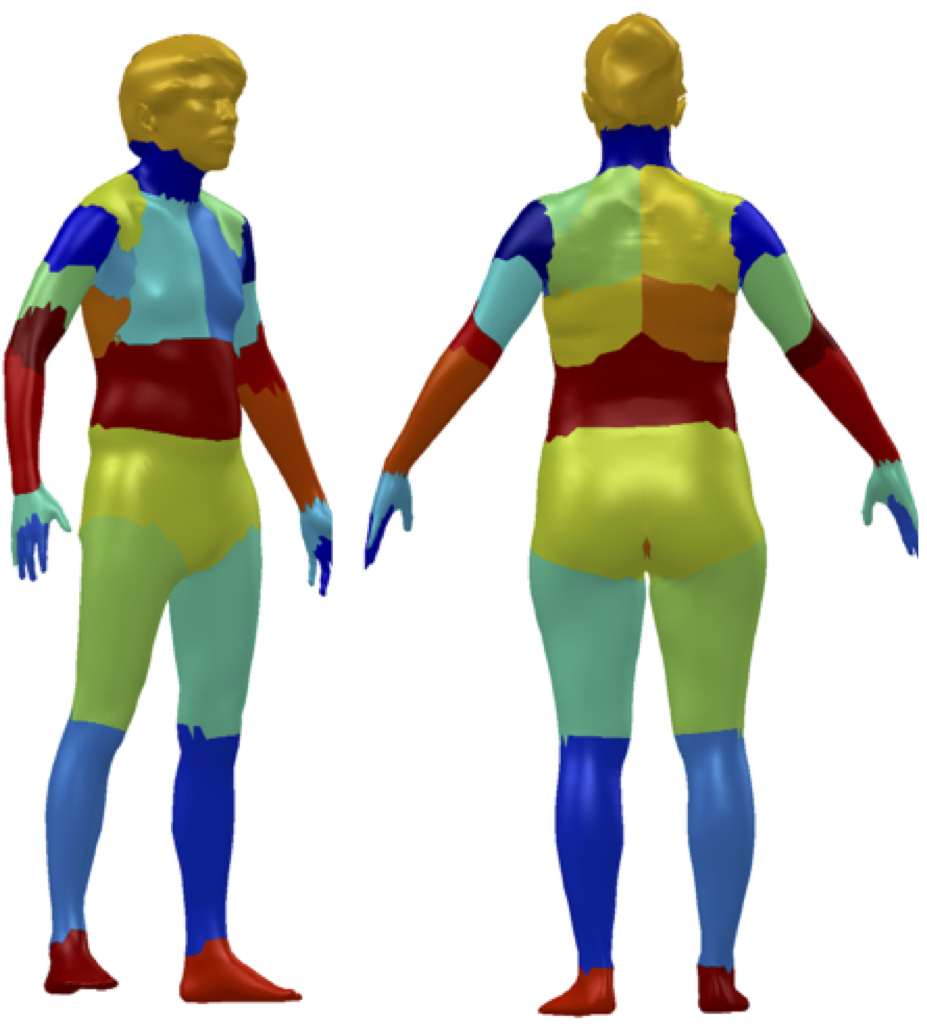

Distance-Dependent Hierarchical Clustering

The distance-dependent Chinese restaurant process (ddCRP) is a flexible nonparametric prior for data clustering. Combining a hierarchical generalization of the ddCRP with spatio-temporal distance measures, we apply MCMC learning to segment text, image, video, and 3D mesh data.

- NIPS 2015 Workshop on Bayesian Nonparametrics: Learning ddCRP affinities via approximate Bayesian computation (ABC).

- UAI 2014: A hierarchical ddCRP for grouped data is applied to video & discourse segmentation.

- NIPS 2012: Deformation-based 3D mesh segmentation applied to human motion analysis.

- NIPS 2011: Natural image segmentation with spatial ddCRP models.

- Software: S. Ghosh's Matlab code for deformation-based segmentation of 3D mesh data.
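The ddCRP is easiest to understand through its customer-link representation. Each data point links to another point with probability proportional to a decay function of their distance, or to itself with probability proportional to a concentration parameter alpha. Clusters are the connected components of the resulting link graph. A minimal sketch of sampling from this basic (non-hierarchical) prior follows. The window decay function, the toy positions, and the function name are illustrative choices.

```python
import numpy as np

def sample_ddcrp(distances, alpha, decay, rng):
    """Draw customer links and cluster labels from a ddCRP prior."""
    n = distances.shape[0]
    links = np.empty(n, dtype=int)
    for i in range(n):
        w = np.array(decay(distances[i]), dtype=float)   # copy, so distances is never modified
        w[i] = alpha                                     # weight of linking to oneself
        links[i] = rng.choice(n, p=w / w.sum())
    # Clusters are connected components of the undirected link graph (union-find).
    parent = list(range(n))

    def find(a):
        while parent[a] != a:
            parent[a] = parent[parent[a]]
            a = parent[a]
        return a

    for i, j in enumerate(links):
        parent[find(i)] = find(int(j))
    _, labels = np.unique([find(i) for i in range(n)], return_inverse=True)
    return links, labels

def window(d):
    """Illustrative window decay: only points within distance 1 may be linked."""
    return (d <= 1.0).astype(float)

positions = np.array([0.0, 0.5, 1.0, 10.0, 10.5])
distances = np.abs(positions[:, None] - positions[None, :])
links, labels = sample_ddcrp(distances, alpha=1.0, decay=window, rng=np.random.default_rng(1))
print(labels)   # points 0-2 and points 3-4 can never share a cluster
```

Because cluster membership emerges from links rather than from assignments to cluster parameters, the decay function directly controls how spatially or temporally contiguous the resulting segments are.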

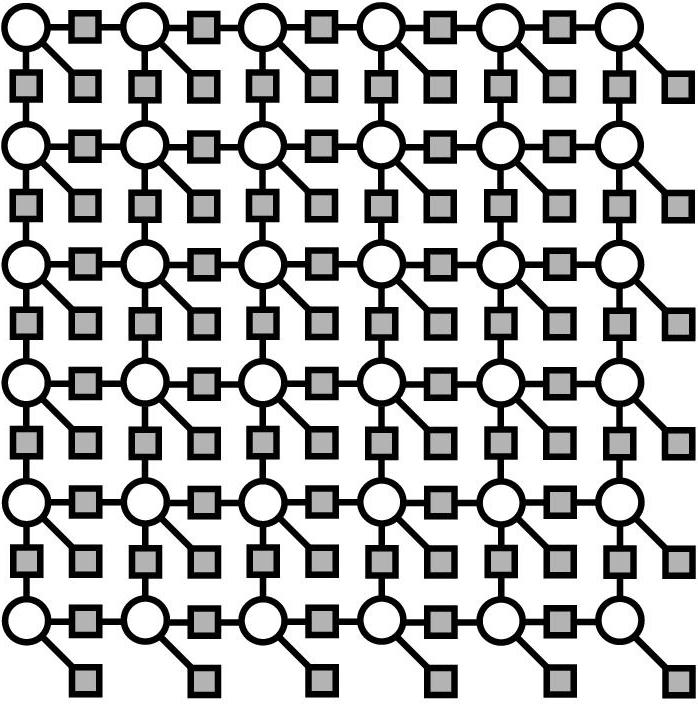

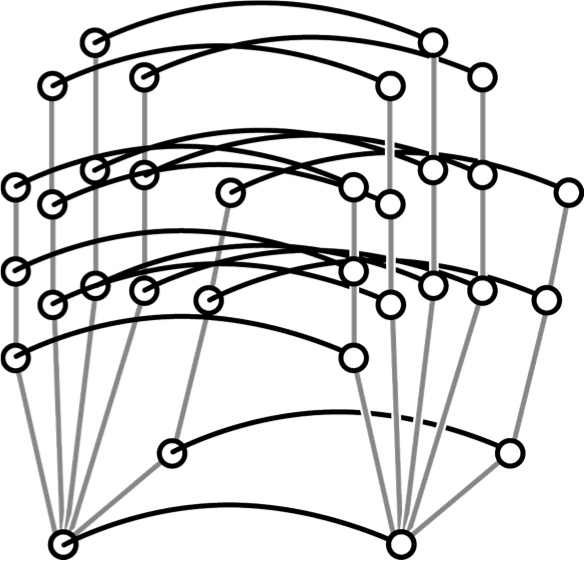

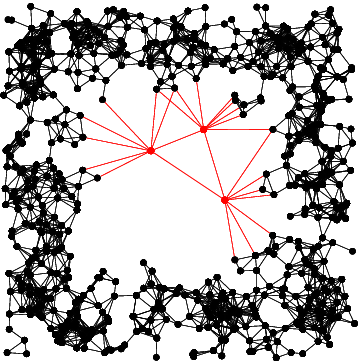

Loopy Belief Propagation

Loopy belief propagation (BP) applies the BP message-passing updates to probabilistic graphical models with cycles, where it is an approximate but often highly accurate inference algorithm. We have contributed to the theory of loopy BP, written tutorial articles, and developed improved optimization algorithms for the associated Bethe variational objective.

- IEEE Signal Processing Magazine 2008: A tutorial introduction to BP for signal & image processing.

- NIPS 2012: Convergent optimization of the Bethe variational approximation underlying loopy BP.

- NIPS 2007: Loop series analysis of BP for attractive graphical models.

- Allerton 2001: A projection algebra analysis of BP decoders for low density parity check codes.
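For intuition, here is a minimal sum-product loopy BP sketch for a pairwise discrete model. It is compared against exact marginals computed by brute-force enumeration on a three-node cycle. The synchronous (parallel) message schedule, the fixed iteration count, and the toy potentials are assumptions. Convergence is not guaranteed on general loopy graphs.

```python
import itertools
import numpy as np

def loopy_bp(unary, pairwise, iters=50):
    """Synchronous sum-product loopy BP.

    unary: {node: (K,) potential}; pairwise: {(s, t): (K, K) potential indexed [x_s, x_t]}.
    """
    nbrs = {v: [] for v in unary}
    for s, t in pairwise:
        nbrs[s].append(t)
        nbrs[t].append(s)

    def psi(s, t):  # pairwise potential oriented as [x_s, x_t]
        return pairwise[(s, t)] if (s, t) in pairwise else pairwise[(t, s)].T

    msgs = {(s, t): np.ones(len(unary[t])) for s in unary for t in nbrs[s]}
    for _ in range(iters):
        new = {}
        for s, t in msgs:
            prod = unary[s].astype(float)
            for u in nbrs[s]:
                if u != t:
                    prod = prod * msgs[(u, s)]
            m = psi(s, t).T @ prod            # m(x_t) = sum_{x_s} psi(x_s, x_t) prod(x_s)
            new[(s, t)] = m / m.sum()
        msgs = new
    beliefs = {}
    for v in unary:
        b = unary[v].astype(float)
        for u in nbrs[v]:
            b = b * msgs[(u, v)]
        beliefs[v] = b / b.sum()
    return beliefs

def exact_marginals(unary, pairwise):
    """Brute-force marginals by enumerating every joint configuration (tiny models only)."""
    nodes = sorted(unary)
    marg = {v: np.zeros(len(unary[v])) for v in nodes}
    for xs in itertools.product(*(range(len(unary[v])) for v in nodes)):
        a = dict(zip(nodes, xs))
        p = np.prod([unary[v][a[v]] for v in nodes])
        p *= np.prod([pot[a[s], a[t]] for (s, t), pot in pairwise.items()])
        for v in nodes:
            marg[v][a[v]] += p
    return {v: m / m.sum() for v, m in marg.items()}

# A three-node cycle of binary variables with attractive couplings.
unary = {0: np.array([0.7, 0.3]), 1: np.array([0.5, 0.5]), 2: np.array([0.4, 0.6])}
attract = np.array([[2.0, 1.0], [1.0, 2.0]])
pairwise = {(0, 1): attract, (1, 2): attract, (2, 0): attract}
bp, exact = loopy_bp(unary, pairwise), exact_marginals(unary, pairwise)
for v in unary:
    print(v, bp[v].round(3), exact[v].round(3))
```

On this weakly coupled single cycle the BP beliefs should land close to, but not exactly on, the exact marginals. The residual error comes from messages that travel around the loop and are double-counted.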

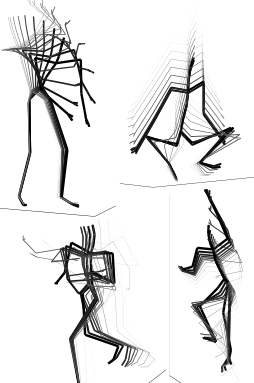

Beta Process Hidden Markov Models

Using infinite feature representations derived from the beta process, the beta process HMM (BP-HMM) discovers a set of latent dynamical behaviors and uses them to segment a library of observed time series. Applications include human activity understanding from video or motion capture data.

- Annals of Applied Stat. 2014: Split-merge MCMC learning of BP-HMMs from motion capture data.

- CVPR 2012 Workshop on Perceptual Organization in Computer Vision: BP-HMM models of video sequences represented by spatio-temporal interest points.

- NIPS 2012: Original conference presentation of split-merge MCMC for the BP-HMM.

- NIPS 2009: Original conference presentation of BP-HMM model. NIPS oral presentation.

- Software: M. Hughes' Matlab code for split-merge MCMC learning of BP-HMMs.
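Marginalizing out the beta process yields the Indian buffet process (IBP), which gives a simple way to sample which behaviors each time series uses. Series i reuses an existing behavior k with probability m_k / i, where m_k counts the earlier series that use it, and then adds Poisson(alpha / i) new behaviors. The sketch below samples this binary feature matrix. It then draws each series' transition matrix over only its active behaviors, with extra self-transition weight kappa, following the BP-HMM construction. Parameter and function names are illustrative, and the sketch covers only the prior, not split-merge MCMC.

```python
import numpy as np

def sample_ibp(num_series, alpha, rng):
    """Binary matrix F[i, k] = True if time series i uses behavior k (Indian buffet process)."""
    counts, rows = [], []          # counts[k]: number of earlier series using behavior k
    for i in range(1, num_series + 1):
        row = [rng.random() < m / i for m in counts]   # reuse popular behaviors
        for k, used in enumerate(row):
            counts[k] += used
        n_new = rng.poisson(alpha / i)                  # plus some brand-new behaviors
        row += [True] * n_new
        counts += [1] * n_new
        rows.append(row)
    F = np.zeros((num_series, len(counts)), dtype=bool)
    for i, row in enumerate(rows):
        F[i, :len(row)] = row
    return F

def series_transitions(f_row, gamma, kappa, rng):
    """Transition matrix over the behaviors this series uses, with self-transition bias kappa."""
    active = np.flatnonzero(f_row)
    if len(active) == 0:
        return active, np.zeros((0, 0))
    eta = rng.gamma(gamma + kappa * np.eye(len(active)))   # unit-scale Gamma draws
    return active, eta / eta.sum(axis=1, keepdims=True)    # normalized rows are Dirichlet

rng = np.random.default_rng(0)
F = sample_ibp(num_series=5, alpha=2.0, rng=rng)
print(F.astype(int))
active, P = series_transitions(F[0], gamma=1.0, kappa=10.0, rng=rng)
```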

Hierarchical Dirichlet Process Hidden Markov Models

The sticky hierarchical Dirichlet process HMM (HDP-HMM) allows an unbounded number of latent states to be learned from unlabeled sequential data. By capturing the "sticky" temporal persistence of real dynamical states, we learn improved models of financial indices, human speech, and honeybee dances.

- MIT News 2008: Article describing applications of switching models.

- Signal Proc. Magazine 2010: Survey of switching nonparametric temporal models.

- Annals of Applied Stat. 2011: MCMC learning of sticky HDP-HMMs for speaker diarization.

- IEEE Trans. Signal Proc. 2011: Switching linear dynamical systems with unbounded latent states.

- IFAC System ID 2009: Learning sparse switching dynamics via automatic relevance determination.

- Information Fusion 2007: Application to tracking maneuvering targets with switching dynamics.

- NIPS 2008: Original conference presentation of HDP switching linear dynamical system.

- ICML 2008: Original conference presentation of sticky HDP hidden Markov model.

- Software: E. Fox's Matlab code for MCMC learning of HDP-HMMs. Variational learning in BNPy.
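A common finite approximation to the sticky HDP-HMM is the "weak limit" truncation to L states. Global state weights are drawn as beta ~ Dir(gamma/L, ..., gamma/L), and each transition row as pi_j ~ Dir(alpha * beta + kappa * e_j). The extra mass kappa on the self-transition encodes temporal persistence. The sketch below draws from this prior and simulates a state sequence. The truncation level, the hyperparameter values, and the uniform initial state are assumptions for illustration.

```python
import numpy as np

def sticky_hdp_hmm_prior(L, gamma, alpha, kappa, rng):
    """Weak-limit (L-state) draw of global weights beta and sticky transition matrix pi."""
    beta = rng.dirichlet(np.full(L, gamma / L))
    pi = np.vstack([rng.dirichlet(alpha * beta + kappa * np.eye(L)[j]) for j in range(L)])
    return beta, pi

def sample_states(pi, T, rng):
    """Simulate a Markov state sequence z_1..z_T from transition matrix pi."""
    z = np.empty(T, dtype=int)
    z[0] = rng.integers(pi.shape[0])          # uniform initial state (assumption)
    for t in range(1, T):
        z[t] = rng.choice(pi.shape[0], p=pi[z[t - 1]])
    return z

rng = np.random.default_rng(0)
beta, pi = sticky_hdp_hmm_prior(L=10, gamma=2.0, alpha=1.0, kappa=50.0, rng=rng)
z = sample_states(pi, T=200, rng=rng)
print(np.diag(pi).round(2))          # large self-transition probabilities
print(np.mean(z[1:] == z[:-1]))      # fraction of steps that stay in the same state
```

Setting kappa to zero recovers the ordinary (non-sticky) HDP-HMM prior, whose sampled sequences switch states far more often.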

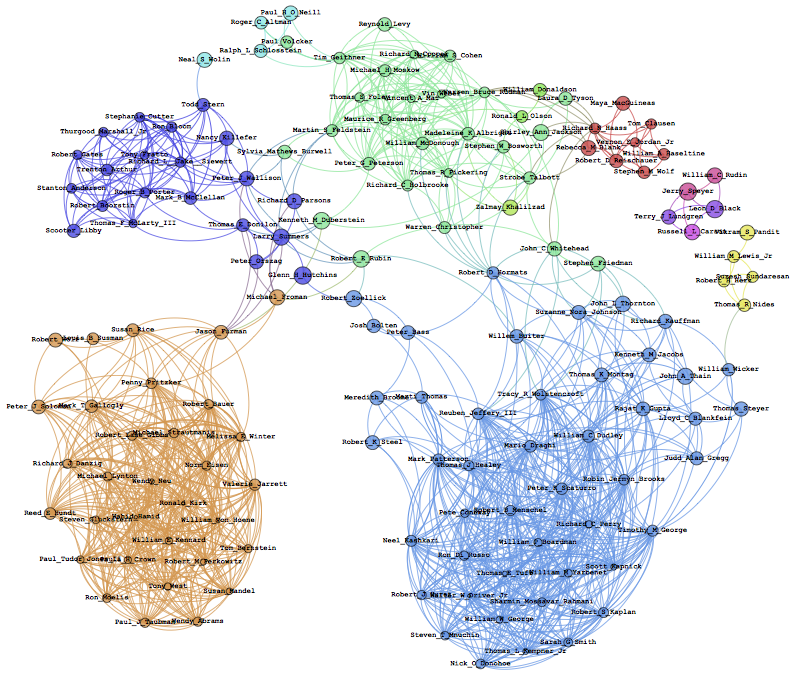

Doubly Correlated Nonparametric Topic & Relational Models

A stick-breaking representation allows Bayesian nonparametric learning of an unbounded number of topics from text data, or communities from network data. We learn correlations in topic/community membership and better predict relationships by exploiting document/entity metadata.
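The basic stick-breaking (GEM) construction draws proportions v_k ~ Beta(1, alpha) and sets pi_k = v_k * prod_{l<k} (1 - v_l). A truncated sketch follows. It shows only the standard, uncorrelated construction and does not attempt the mechanism by which the doubly correlated model couples memberships to metadata. Function names and the truncation level are illustrative.

```python
import numpy as np

def weights_from_sticks(v):
    """Map stick proportions v_k to weights pi_k = v_k * prod_{l<k} (1 - v_l)."""
    v = np.asarray(v, dtype=float)
    remaining = np.concatenate(([1.0], np.cumprod(1.0 - v[:-1])))   # stick left before break k
    return v * remaining

def sample_stick_breaking(alpha, truncation, rng):
    """Truncated GEM(alpha) draw: v_k ~ Beta(1, alpha), with the last break taking the remainder."""
    v = rng.beta(1.0, alpha, size=truncation)
    v[-1] = 1.0
    return weights_from_sticks(v)

print(weights_from_sticks([0.5, 0.5, 1.0]).tolist())   # [0.5, 0.25, 0.25]
pi = sample_stick_breaking(alpha=2.0, truncation=20, rng=np.random.default_rng(0))
print(pi.sum())                                       # 1.0 up to rounding
```

Larger alpha makes each break smaller on average, spreading mass over more topics or communities.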

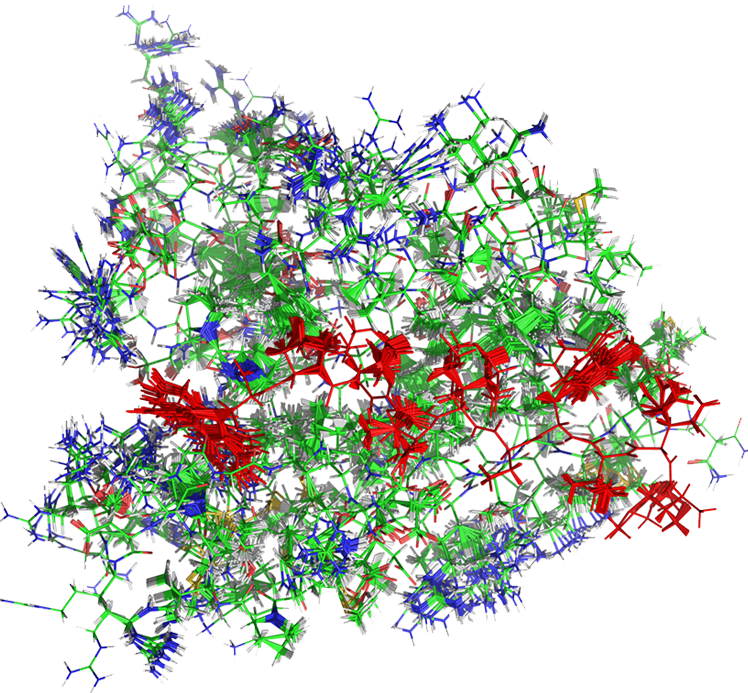

Transformed Dirichlet Process Models of Visual Scenes

Hierarchical probabilistic models for objects, the parts composing them, and the visual scenes surrounding them. We capture geometric context via spatial transformations, and use hierarchical Dirichlet processes to learn new objects and parts from partially labeled images or stereo pairs.

- Int. Journal of Computer Vision 2008: TDP models & MCMC inference for 2D images.

- CVPR 2006: Nonparametric 3D scene understanding from stereo image pairs.

- NIPS 2005: Original conference presentation of nonparametric TDP model.

- ICCV 2005: Earlier parametric model for scenes with fixed numbers of objects and parts.

- MIT PhD Thesis, May 2006: Additional technical background and experiments.

- Software: Matlab code for MCMC learning of TDP models. Code & image data (372 MB).

Nonparametric Belief Propagation

Nonparametric belief propagation (NBP) generalizes discrete BP to graphical models with non-Gaussian continuous variables, using sample-based marginal approximations inspired by particle filters. Applications include kinematic tracking of visual motion and distributed localization in sensor networks.

- Communications of the ACM 2010: A CACM research highlight introduced by Weiss & Pearl.

- CVPR 2003: Defines the NBP algorithm and applies it to a part-based model of facial appearance. CVPR oral presentation.

- NIPS 2003: Efficient NBP message updates via multiscale, KD-tree representations.

- GMBV 2004 & NIPS 2004: Occlusion-sensitive tracking of articulated hand motion from videos. NIPS oral presentation.

- MIT PhD Thesis, May 2006: Additional technical details on 3D orientation estimation for tracking.

- IROS 2009: Tracking networks of mobile robots using noisy distance measurements.

- Software: A. Ihler's Matlab and C++ code for multiscale kernel density estimation and sampling.
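At its core, an NBP message update multiplies the incoming sample-based messages at a node and pushes the result through the pairwise potential. The sketch below is a simplified one-dimensional version. It assumes Gaussian-mixture messages with a shared bandwidth and a Gaussian pairwise potential that shifts x_s by a known offset. The product step is approximated by importance sampling, a simple stand-in for the more efficient samplers developed in the papers above. All names are hypothetical.

```python
import numpy as np

def mixture_pdf(x, means, weights, bw):
    """Density at points x of a 1D Gaussian mixture with shared bandwidth bw."""
    z = (np.asarray(x)[:, None] - means[None, :]) / bw
    return (weights[None, :] * np.exp(-0.5 * z ** 2)).sum(axis=1) / (bw * np.sqrt(2.0 * np.pi))

def nbp_message(incoming, offset, noise_sd, num_samples, rng):
    """Sample-based message from node s to node t.

    incoming: list of (means, weights, bw) mixtures at node s (local evidence and messages
    from neighbors other than t). Assumed pairwise potential: x_t ~ N(x_s + offset, noise_sd^2).
    """
    means0, w0, bw0 = incoming[0]
    # Propose samples of x_s from the first mixture...
    comp = rng.choice(len(means0), size=num_samples, p=w0)
    xs = rng.normal(means0[comp], bw0)
    # ...and importance-weight them by the remaining factors of the product.
    logw = np.zeros(num_samples)
    for means, w, bw in incoming[1:]:
        logw += np.log(mixture_pdf(xs, means, w, bw) + 1e-300)
    w = np.exp(logw - logw.max())
    w /= w.sum()
    # Pushing each weighted sample through the Gaussian potential gives a mixture over x_t.
    return xs + offset, w, noise_sd

rng = np.random.default_rng(0)
evidence = (np.array([0.0]), np.array([1.0]), 1.0)
from_neighbor = (np.array([1.0]), np.array([1.0]), 1.0)
centers, weights, bw = nbp_message([evidence, from_neighbor], offset=2.0, noise_sd=0.5,
                                   num_samples=2000, rng=rng)
print(np.sum(weights * centers))   # near 0.5 + 2.0, since N(0,1) x N(1,1) is centered at 0.5
```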

Embedded Trees & Gaussian Graphical Models

An inference algorithm for Gaussian graphical models that exploits embedded, tree-structured graphs. Generalizing loopy belief propagation, our embedded trees algorithm not only rapidly computes posterior means, but also correctly estimates posterior variances (uncertainties).

- IEEE Trans. on Signal Processing 2004: Embedded trees theory & experimentation.

- MIT SM Thesis, Feb. 2002: Additional technical detail and proofs.

- NIPS 2000: Original conference paper.
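The embedded trees iteration for the posterior mean can be viewed as a matrix splitting. Write the precision matrix as J = J_T - K_T, where J_T keeps the diagonal and the edges of a spanning tree and K_T holds the cut edges. Each step then solves J_T x_new = K_T x_old + h, and any fixed point satisfies J x = h. The sketch below alternates between two spanning trees of a four-node cycle. For brevity it uses a dense linear solve where a real implementation would run exact BP on the tree in linear time. It computes means only, not the variance estimates described above, and the names are illustrative.

```python
import numpy as np

def embedded_trees_mean(J, h, tree_masks, iters=100):
    """Iterate J_T x_new = K_T x_old + h, cycling through spanning trees; the fixed point solves J x = h."""
    n = len(h)
    x = np.zeros(n)
    for it in range(iters):
        keep = tree_masks[it % len(tree_masks)] | np.eye(n, dtype=bool)
        J_T = np.where(keep, J, 0.0)            # diagonal plus this tree's edges
        K_T = J_T - J                           # the cut edges, so that J = J_T - K_T
        x = np.linalg.solve(J_T, K_T @ x + h)   # stand-in for an O(n) tree BP solve
    return x

def tree_mask(n, edges):
    """Symmetric boolean adjacency mask for a list of undirected edges."""
    m = np.zeros((n, n), dtype=bool)
    for s, t in edges:
        m[s, t] = m[t, s] = True
    return m

# A four-node cycle; each spanning tree drops a different edge.
J = 3.0 * np.eye(4)
for s, t in [(0, 1), (1, 2), (2, 3), (3, 0)]:
    J[s, t] = J[t, s] = -1.0
h = np.array([1.0, 0.0, -1.0, 2.0])
trees = [tree_mask(4, [(0, 1), (1, 2), (2, 3)]), tree_mask(4, [(2, 3), (3, 0), (0, 1)])]
x = embedded_trees_mean(J, h, trees)
print(np.allclose(x, np.linalg.solve(J, h)))   # True
```

Alternating trees means each cut edge is handled exactly by some tree on alternate steps, which is what lets the iteration converge quickly on this small diagonally dominant example.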